Scaling Document AI in Regulated Industries

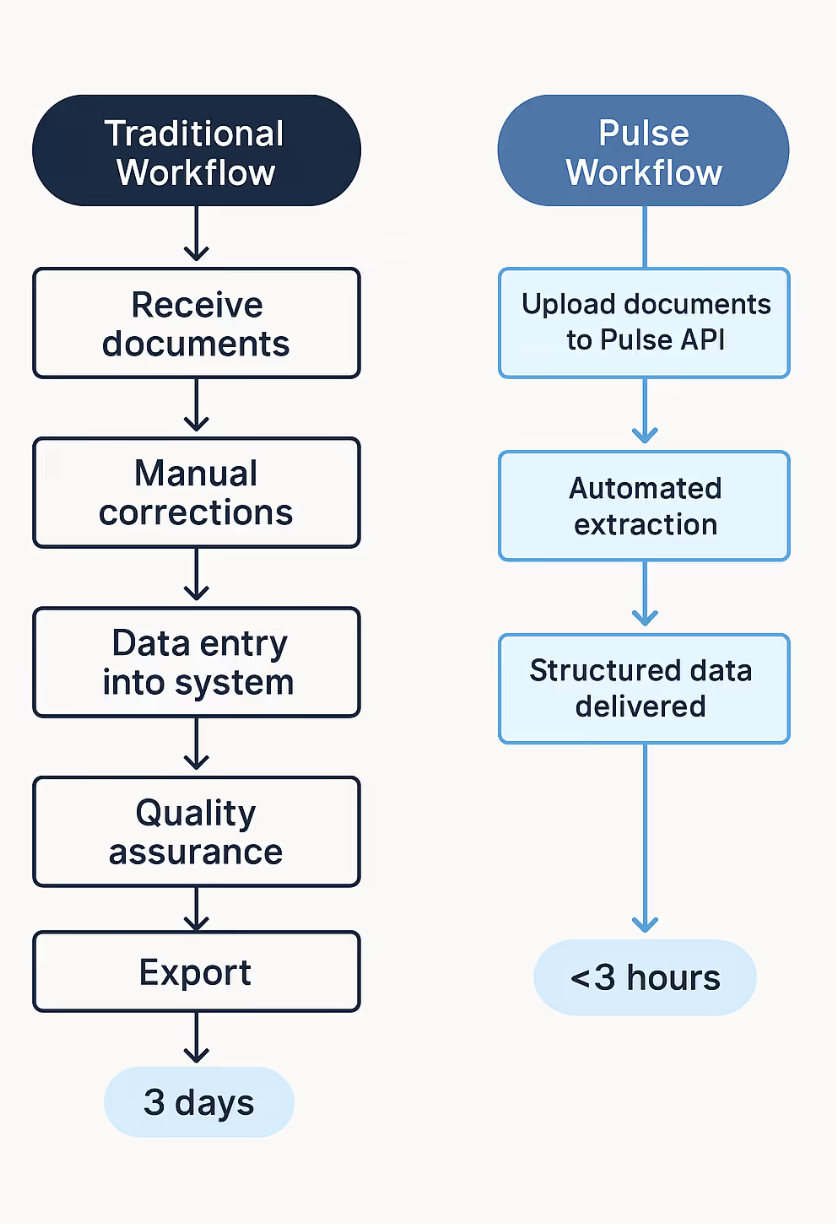

Most AI systems look convincing in pilots because pilots are small and controlled. Accuracy appears high when the workload is a few curated PDFs, and latency is predictable when throughput is capped at a few hundred pages. What those early demos never capture is what happens when a financial institution, an insurer, or a hospital network suddenly pushes millions of pages through in a matter of hours.

Regulated industries do not operate on smooth, steady streams of data. They operate in bursts. An insurer can receive thousands of claims at once following a natural disaster. A global bank might need to process every quarterly filing in parallel. A hospital group may digitize entire archives of scanned clinical records in a single migration. Under these conditions, systems that seemed robust during pilots often begin to fracture. Latency spikes, outputs drift, and subtle structural errors propagate silently across entire datasets.

Why Scale Breaks Trust in Regulated Sectors

In finance, insurance, and healthcare, it is not enough to be “mostly correct.” A decimal shift in a valuation table can distort portfolio models by millions. A misread coverage limit in a claims file creates legal exposure. A dosage unit dropped from a clinical note introduces a patient safety risk. What makes these errors more dangerous is that they are rarely obvious: they emerge quietly at scale, where manual verification becomes impractical without a rigorous audit trail.

This is why bounding boxes and citations are not optional features. Every extracted value must be linked to its precise coordinates on the page, its surrounding header context, and the version of the model that produced it. Compliance officers and risk managers do not ask whether a system is ninety-five percent accurate. They ask whether, when challenged months later, every number can be traced back to its original location in the source document.
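
As a minimal sketch of what such a traceable record could look like, the snippet below attaches page coordinates, header context, and a model version to one extracted value. Every class name, field name, and sample value here is a hypothetical illustration, not a schema taken from any particular product.

```python
from dataclasses import dataclass, asdict
from typing import Tuple
import json


@dataclass(frozen=True)
class BoundingBox:
    page: int   # 1-based page number in the source document
    x0: float   # left edge, in page units
    y0: float   # top edge
    x1: float   # right edge
    y1: float   # bottom edge


@dataclass(frozen=True)
class ExtractedValue:
    field: str                        # logical field name
    value: str                        # value exactly as read from the page
    bbox: BoundingBox                 # where on the page the value was found
    header_context: Tuple[str, ...]   # headers above the value, outermost first
    model_version: str                # version of the model that produced it
    source_sha256: str                # hash identifying the source file


def to_audit_json(v: ExtractedValue) -> str:
    """Serialize with sorted keys so the record is stable across runs."""
    return json.dumps(asdict(v), sort_keys=True)


# Hypothetical sample record.
example = ExtractedValue(
    field="coverage_limit",
    value="50000",
    bbox=BoundingBox(page=3, x0=412.0, y0=188.5, x1=468.0, y1=201.0),
    header_context=("Schedule A", "Coverage Limit"),
    model_version="extractor-1.4.2",
    source_sha256="<sha256 of the source PDF>",
)
print(to_audit_json(example))
```

A record shaped like this lets a reviewer open page 3, look at the stated rectangle, and confirm the number months after extraction.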

Why Single-Pass Models Collapse

Many modern systems promise one-pass extraction: upload a document, receive structured data. These architectures rarely survive enterprise scale. The reason is structural. Collapsing a two-dimensional layout into a linear token sequence inevitably discards relationships. Page breaks sever continuity across tables. Probabilistic (sampling-based) decoding introduces run-to-run variability. During bursty loads, these weaknesses compound and cause inconsistencies that are impossible to defend in an audit.
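
The loss of layout relationships can be shown with a toy example. The sketch below assumes a three-row table in which one value cell is empty; the cell data and function names are invented for illustration. Once the cells are flattened into a token stream, a naive reader pairs labels with the wrong values, while a reader that keeps the row and column coordinates does not.

```python
# Each cell is (row, column, text); the "Deductible" row has no value cell.
cells = [
    (0, 0, "Premium"), (0, 1, "1200"),
    (1, 0, "Deductible"),
    (2, 0, "Limit"), (2, 1, "50000"),
]


def linearize(cells):
    """Emit text in reading order; the coordinates are thrown away."""
    return [text for _, _, text in sorted(cells)]


def rows_from_layout(cells):
    """Rebuild label -> value pairs using the row/column coordinates."""
    rows = {}
    for r, c, text in cells:
        rows.setdefault(r, {})[c] = text
    return {cols[0]: cols.get(1) for _, cols in sorted(rows.items())}


tokens = linearize(cells)
# Pairing consecutive tokens is all a coordinate-free reader can do.
naive = dict(zip(tokens[0::2], tokens[1::2]))

print(naive)                    # "Deductible" is paired with the label "Limit"
print(rows_from_layout(cells))  # "Deductible" correctly has no value
```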

The Multi-Pass Approach

Scaling document AI in regulated industries requires a disciplined pipeline. In practice, this means decomposing the problem into multiple deterministic passes:

- OCR for characters, producing per-character bounding boxes with normalization.

- Layout or vision-language models for structure, reconstructing tables, headers, and cross-page continuity.

- Constraint validation, enforcing totals, units, and domain-specific checks.

- Lineage packaging, attaching coordinates, page IDs, and model version metadata to every extracted value.

Provided each pass is pinned to a fixed model version and configuration and run without sampling, this design can make outputs byte-identical for the same input, so bursts of millions of documents can be processed without hidden drift. When the system is uncertain, it abstains and routes the document to review rather than producing plausible but unverifiable guesses.
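
The constraint-validation and abstention steps can be sketched as a single check. The example below assumes one simple rule (line items must sum to the stated total) and a hypothetical `check_total` helper; real domain checks would be more numerous, but the pattern of returning "review" instead of guessing is the same.

```python
from decimal import Decimal, InvalidOperation


def check_total(line_items, stated_total, tolerance=Decimal("0")):
    """Accept only if the line items sum to the stated total.

    Anything unparseable or inconsistent is routed to review
    rather than silently corrected.
    """
    try:
        amounts = [Decimal(a) for a in line_items]
        total = Decimal(stated_total)
    except (InvalidOperation, TypeError, ValueError):
        return {"status": "review", "reason": "unparseable amount"}

    diff = abs(sum(amounts, Decimal("0")) - total)
    if diff > tolerance:
        return {"status": "review",
                "reason": f"line items differ from total by {diff}"}
    return {"status": "accept", "total": str(total)}


ok = check_total(["100.00", "250.50"], "350.50")
shifted = check_total(["100.00", "250.50"], "3505.0")   # decimal shift
garbled = check_total(["1O0.00"], "100.00")             # letter O, not zero
print(ok, shifted, garbled, sep="\n")
```

Using `Decimal` instead of floats keeps the arithmetic exact, so the check itself cannot introduce rounding differences between runs.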

Scalability Redefined

In consumer AI, scalability usually means speed. In regulated industries, scalability is a composite requirement. It means systems must remain deterministic across quarters, provide auditability for every extracted field, remain stable under burst loads of millions of pages, and do so without degradation in accuracy or trustworthiness. Enterprises measure all of these dimensions, not just throughput.
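
One way to measure the determinism dimension is to fingerprint each output and compare fingerprints across repeated runs of the same input. This is a minimal sketch under the assumption that outputs are JSON-serializable dictionaries; the function names are hypothetical.

```python
import hashlib
import json


def fingerprint(output: dict) -> str:
    """Hash a canonical JSON form so key order does not affect the result."""
    canonical = json.dumps(output, sort_keys=True,
                           separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def is_deterministic(run_outputs) -> bool:
    """True if every run of the same input produced the same output."""
    return len({fingerprint(o) for o in run_outputs}) == 1


run_a = {"coverage_limit": "50000", "page": 3}
run_b = {"page": 3, "coverage_limit": "50000"}   # same content, other key order
run_c = {"coverage_limit": "5000", "page": 3}    # a dropped digit

print(is_deterministic([run_a, run_b]))          # True
print(is_deterministic([run_a, run_b, run_c]))   # False
```

Storing the fingerprint alongside the model version makes it possible to rerun an old input in a later quarter and detect drift automatically.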

Closing

The gap between pilots and production in document AI is not primarily a question of accuracy. It is a question of whether the system can survive the operational realities of regulated industries: unpredictable bursts, compliance scrutiny, and the need for deterministic, auditable outputs at scale. Systems that cannot meet this bar remain stuck in pilot purgatory. Systems that can are treated as infrastructure.