When you build AI for finance, you learn quickly that “99 percent accuracy” is not a useful benchmark. In many industries a one percent error is a nuisance. In finance that same one percent becomes a systematic bias that distorts valuation models, covenant tests, portfolio monitoring, and regulatory reporting.

The problem is not only accuracy. It is predictability. Financial documents require deterministic extraction. The same document parsed today, tomorrow, or next quarter should yield the same structured outputs, with the same field names, the same units, and the same source citations. If a system cannot offer that level of stability, the downstream data cannot be trusted.

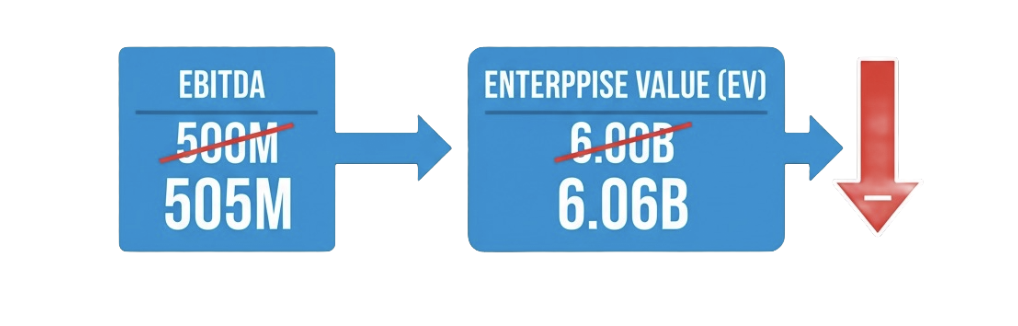

The one percent that moves markets

A simple example makes the stakes clear. Suppose a filing reports EBITDA of 500M and a model applies a 12x multiple. That implies 6.00B of enterprise value. If the extraction inflates EBITDA by one percent to 505M, the model now suggests 6.06B. A 60M swing appears without any change in fundamentals. That is a tangible cost of small, silent extraction errors.
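
The arithmetic is easy to reproduce. The following is a minimal sketch in Python, with amounts in millions; the function name `enterprise_value` is illustrative and not taken from any library.

```python
from decimal import Decimal

def enterprise_value(ebitda_m: Decimal, multiple: Decimal) -> Decimal:
    """Implied enterprise value in millions for an EBITDA figure in millions."""
    return ebitda_m * multiple

multiple = Decimal("12")
reported = Decimal("500")               # EBITDA as filed, in millions
extracted = reported * Decimal("1.01")  # the same figure with a one percent extraction error

swing = enterprise_value(extracted, multiple) - enterprise_value(reported, multiple)
print(swing)  # 60.00, a 60M change in implied enterprise value
```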

Accuracy is not enough. Predictability is the benchmark.

Accuracy tells you whether the system got the right answer once. Predictability tells you whether it will behave the same way across time, across documents, and across model versions. Finance teams depend on longitudinal data. If a field is labeled EBITDA in Q1, Operating Income in Q2, and Adj. EBITDA in Q3, the dataset is already compromised even if each single extraction was “correct” in context.

Two properties separate production-ready systems from demos:

- Determinism. Given the same input and configuration, the system produces identical outputs.

- Lineage. Every output carries a verifiable path back to the source page and geometry.
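
One way to make the determinism property testable is to fingerprint each run's output and compare digests. The sketch below assumes extracted fields are plain dictionaries that already carry page and bounding-box lineage; the field layout and the `output_fingerprint` helper are hypothetical.

```python
import hashlib
import json

def output_fingerprint(fields: list[dict]) -> str:
    """SHA-256 digest of a canonical JSON rendering of the extracted fields."""
    # sort_keys and fixed separators make the serialization independent of key order.
    canonical = json.dumps(fields, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

# Two runs over the same document. Values are kept as strings so that
# float formatting cannot introduce spurious differences.
run_a = [{"name": "EBITDA", "value": "500", "unit": "USD_M",
          "page": 14, "bbox": [72, 310, 188, 324]}]
run_b = [{"page": 14, "bbox": [72, 310, 188, 324],
          "unit": "USD_M", "value": "500", "name": "EBITDA"}]

assert output_fingerprint(run_a) == output_fingerprint(run_b)
```

Any change in a value, a unit, a label, or a coordinate changes the digest, so a mismatch between two runs on the same input and configuration is an immediate signal to investigate.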

Where unpredictability sneaks in

In our work with investment teams, insurers, banks, and buy-side technical teams, the same failure modes surface repeatedly.

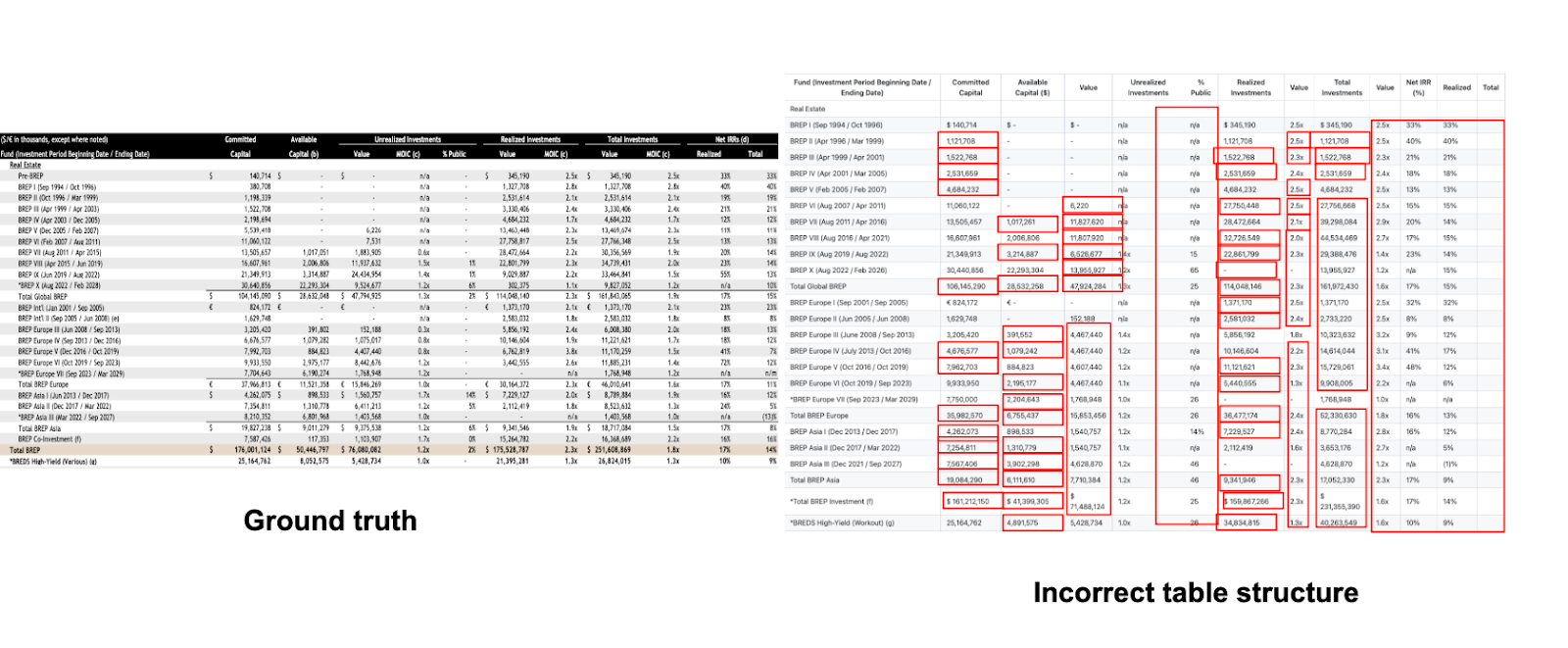

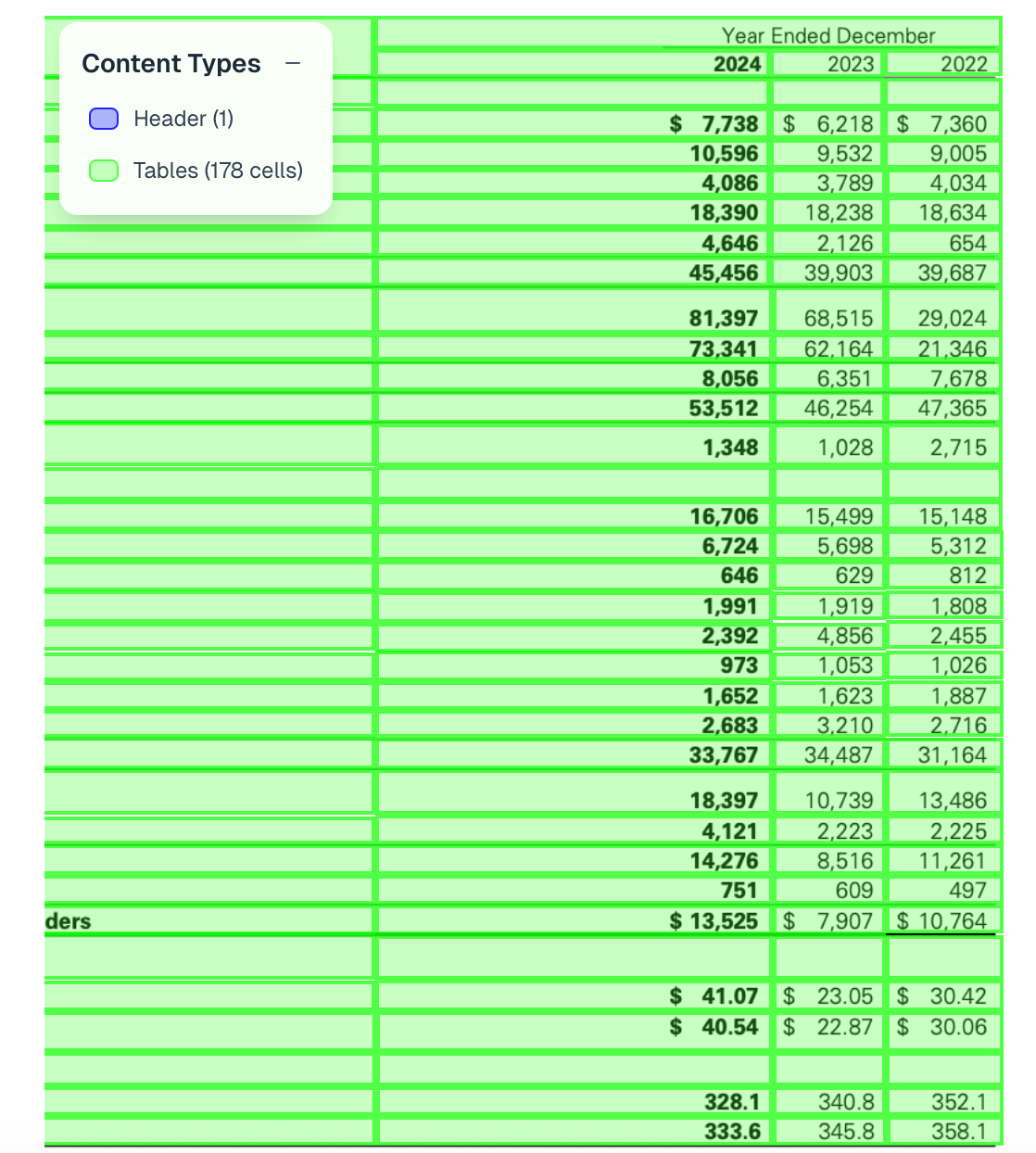

1) Table structure loss

Tables are the schema of finance. They encode hierarchies, multi-level headers, rollups, and constraints. Many general-purpose systems flatten two-dimensional structure into a one-dimensional token sequence. The result is that numbers are captured but their relationships are lost. Totals drift away from subtotals. Headers become detached from the cells they govern. Multi-page spreads break row continuity. What looks like “90 percent accurate” at the character level becomes unusable at the analytical level.

What matters is structure fidelity. Can the system preserve the geometry, spanning headers, row groups, and the cross-page continuation of a table? Can it maintain the binding between a cell and the header stack above it? Without this, numeric precision is irrelevant.
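
The binding between a cell and its header stack can be illustrated with a small sketch. It assumes header cells have already been detected with their column spans; the `HeaderCell` type and the `header_stack` function are hypothetical names for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class HeaderCell:
    text: str
    col_start: int  # first column covered, inclusive
    col_end: int    # last column covered, inclusive

def header_stack(header_rows: list[list[HeaderCell]], col: int) -> tuple[str, ...]:
    """Collect, top to bottom, the header in each row whose span covers the column."""
    stack = []
    for row in header_rows:
        for cell in row:
            if cell.col_start <= col <= cell.col_end:
                stack.append(cell.text)
                break
    return tuple(stack)

# A two-level header: fiscal years span two columns each.
rows = [
    [HeaderCell("FY2023", 1, 2), HeaderCell("FY2024", 3, 4)],
    [HeaderCell("Reported", 1, 1), HeaderCell("Adjusted", 2, 2),
     HeaderCell("Reported", 3, 3), HeaderCell("Adjusted", 4, 4)],
]
print(header_stack(rows, 3))  # ('FY2024', 'Reported')
```

If the spanning header is flattened away, columns 1 and 3 both read "Reported" and the fiscal year is lost, which is exactly the failure described above.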

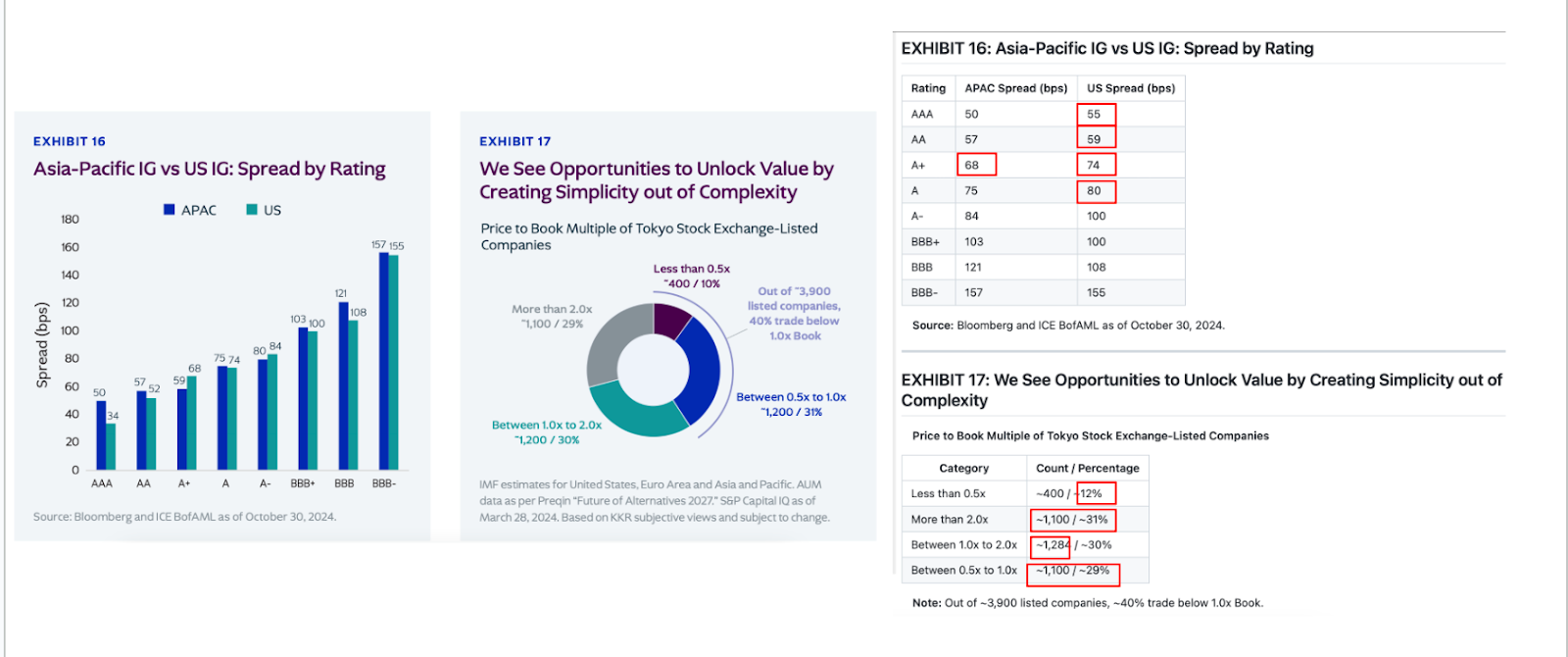

2) Numeric instability

Finance documents vary by locale and format. Common issues include decimal shifts, thousands separator confusion, currency markers disappearing, and unit normalization errors. For example, “1.234,56” versus “1,234.56” encode the same quantity under different conventions. A deterministic numeric layer must resolve these cases with rules, not guesses.
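
A rule-based parser can be sketched as follows. It assumes the locale is declared by the caller (for example from document metadata) rather than inferred from the string, and it covers only two illustrative locales; `SEPARATORS` and `parse_amount` are hypothetical names. Anything that does not match the declared convention is rejected instead of being reinterpreted.

```python
from decimal import Decimal

# Illustrative locale table: (thousands separator, decimal separator).
SEPARATORS = {"en_US": (",", "."), "de_DE": (".", ",")}

def _digits(s: str) -> bool:
    return s.isascii() and s.isdigit()

def parse_amount(text: str, locale: str) -> Decimal:
    """Parse a formatted amount under a declared locale, or raise ValueError."""
    if locale not in SEPARATORS:
        raise ValueError(f"no separator rule for locale {locale!r}")
    thousands, decimal_sep = SEPARATORS[locale]
    body = text.strip()
    negative = body.startswith("(") and body.endswith(")")  # accounting-style negative
    if negative:
        body = body[1:-1]
    whole, sep, frac = body.partition(decimal_sep)
    groups = whole.split(thousands)
    # Grouped form: a leading group of 1-3 digits, then groups of exactly 3 digits.
    grouped = (len(groups) > 1 and _digits(groups[0]) and len(groups[0]) <= 3
               and all(_digits(g) and len(g) == 3 for g in groups[1:]))
    plain = len(groups) == 1 and _digits(groups[0])
    if not (grouped or plain) or (sep and not _digits(frac)):
        raise ValueError(f"cannot parse {text!r} under locale {locale!r}")
    value = Decimal("".join(groups) + ("." + frac if frac else ""))
    return -value if negative else value

print(parse_amount("1.234,56", "de_DE"))  # 1234.56
print(parse_amount("1,234.56", "en_US"))  # 1234.56
```

Passing "1.234,56" with the `en_US` rule raises `ValueError` rather than returning a shifted value, which turns a silent decimal error into an explicit failure.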

3) Model drift over time

Model or configuration changes that alter outputs break comparability. A pipeline that silently changes its interpretation of the same credit agreement between quarters creates operational noise and audit risk. Versioning, canary sets, and side-by-side diffs are essential to catch drift before it hits production.

4) Probabilistic substitution

Large language models are powerful for reasoning and summarization, but their probabilistic decoding can substitute statistically common tokens under uncertainty. If “Operating Income” appears more often in training data than “EBITDA,” a low-confidence pass can swap the terms even when the document itself says otherwise. In extraction, silent substitutions are worse than explicit failures.

Why bounding boxes and citations are non-negotiable

Auditors and analysts need to verify where a value came from. A number without its location is an orphan. The fix is simple to state and non-trivial to execute: every extracted value must carry granular bounding boxes and page coordinates as a citation.

Granularity matters. Cell-level boxes should be distinct from character-level and line-level boxes. Merged cells should be represented explicitly rather than approximated. Multi-page tables should maintain row continuity with a stable identifier. With reliable geometry, downstream systems can re-derive context, re-check totals, and display a visual lineage trail.

Bounding boxes do more than help with audit. They enable deterministic re-validation. When totals do not reconcile, a validator can re-sum the exact cells by coordinates and flag the specific lines causing the break.
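
A minimal sketch of such a validator is shown below. It assumes each extracted cell carries its page and bounding box, and that the mapping from a total to its component cells is already known from the table structure; the `Cell` type and `failing_rollups` function are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal

@dataclass(frozen=True)
class Cell:
    label: str
    value: Decimal
    page: int
    bbox: tuple[float, float, float, float]  # (x0, y0, x1, y1) in page units

def failing_rollups(rollups: list[tuple[Cell, list[Cell]]],
                    tolerance: Decimal = Decimal("0")) -> list[tuple[Cell, Decimal]]:
    """Re-sum each total from its component cells and return the totals that break."""
    failures = []
    for total, parts in rollups:
        gap = sum((p.value for p in parts), Decimal("0")) - total.value
        if abs(gap) > tolerance:
            failures.append((total, gap))
    return failures

revenue = Cell("Total revenue", Decimal("200"), 3, (400, 120, 460, 132))
costs = Cell("Total costs", Decimal("85"), 3, (400, 200, 460, 212))
rollups = [
    (revenue, [Cell("Segment A", Decimal("120"), 3, (400, 90, 460, 102)),
               Cell("Segment B", Decimal("80"), 3, (400, 105, 460, 117))]),
    (costs, [Cell("COGS", Decimal("50"), 3, (400, 170, 460, 182)),
             Cell("SG&A", Decimal("30"), 3, (400, 185, 460, 197))]),
]
for total, gap in failing_rollups(rollups):
    print(total.label, total.page, total.bbox, gap)
```

Here only the costs rollup is flagged, with a gap of -5, and the reviewer is pointed at the exact page and box rather than at the whole table.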

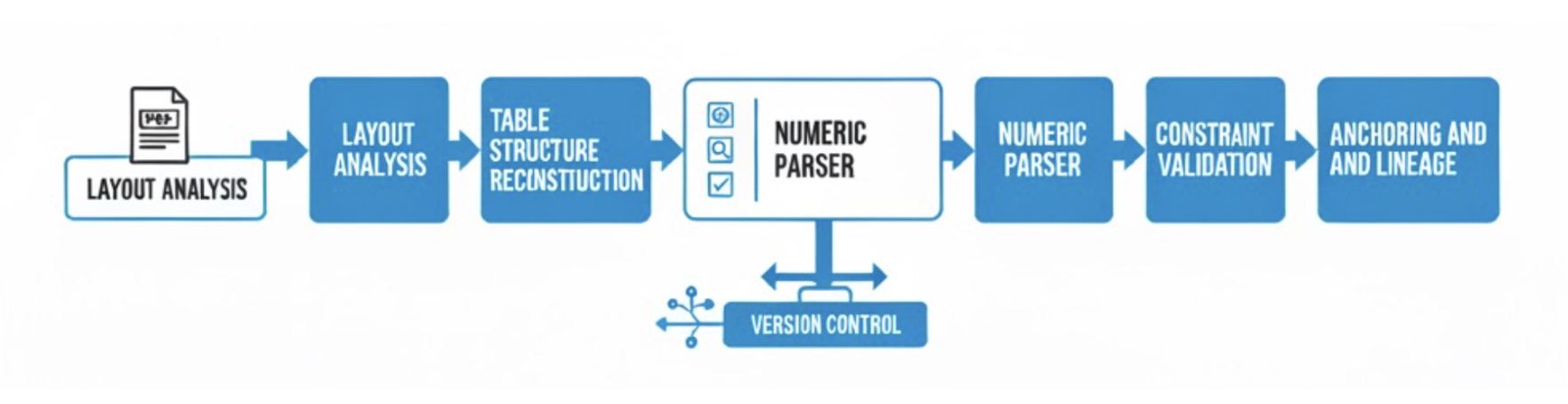

Engineering for deterministic precision

A precision-first pipeline looks different from a generic OCR or VLM demo. The following design choices are what we have found to work in practice.

Anchor everything

Attach bounding boxes, page numbers, and model version metadata to every field. Store the header stack path for each cell so that the semantic label and the structural context travel together.
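
As an illustration, a field record along these lines might look like the sketch below. The `AnchoredField` type, its attribute names, and the version string are assumptions made for the example, not a prescribed schema.

```python
from dataclasses import dataclass, asdict

@dataclass(frozen=True)
class AnchoredField:
    name: str                                # semantic label, e.g. "EBITDA"
    value: str                               # kept as text; parsed by a separate numeric layer
    unit: str
    page: int
    bbox: tuple[float, float, float, float]  # (x0, y0, x1, y1) on that page
    header_path: tuple[str, ...]             # header stack above the cell, top to bottom
    model_version: str                       # version of the extraction model and configuration

ebitda = AnchoredField(
    name="EBITDA", value="500", unit="USD_M", page=14,
    bbox=(72.0, 310.0, 188.0, 324.0),
    header_path=("FY2024", "Adjusted"),
    model_version="extractor-1.4.2",  # hypothetical version identifier
)
record = asdict(ebitda)  # plain dict, ready to store alongside the value
```

Making the record immutable (`frozen=True`) reflects the idea that an extracted value and its citation are written once and never edited in place.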

Structure before semantics

Reconstruct table geometry first, including multi-level headers, column spans, row grouping, and cross-page stitching. Only then assign meaning. This avoids a common failure where a model labels values correctly but binds them to the wrong headers.

Deterministic numeric parsing

Implement locale-aware rules for decimals and thousands separators. Standardize units and currencies through explicit conversion tables. Avoid fuzzy heuristics for numeric inference.
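
For unit standardization, an explicit table can be as simple as the sketch below. The labels in `SCALE` and the `to_base_units` function are hypothetical; a real table would list whichever scale labels actually appear in the documents being processed, and currency conversion would follow the same lookup pattern with its own table.

```python
from decimal import Decimal

# Illustrative scale table. Labels not listed here are rejected, never guessed.
SCALE = {
    "units": Decimal("1"),
    "thousands": Decimal("1000"),
    "millions": Decimal("1000000"),
    "billions": Decimal("1000000000"),
}

def to_base_units(value: Decimal, scale_label: str) -> Decimal:
    """Convert a reported figure to base units using the explicit scale table."""
    try:
        factor = SCALE[scale_label]
    except KeyError:
        raise ValueError(f"unknown scale label {scale_label!r}") from None
    return value * factor

print(to_base_units(Decimal("505"), "millions"))  # 505000000
```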

Constraint enforcement

Apply accounting identities and reconciliation rules. Subtotals must sum to totals within a specified tolerance. Balance sheets must balance. Where the rule fails, abstain and escalate.
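
The balance sheet identity is the simplest case to sketch. The function name, the return values, and the default tolerance of 0.5 (intended to absorb rounding in the reported unit) are assumptions for illustration.

```python
from decimal import Decimal

def balance_sheet_check(assets: Decimal, liabilities: Decimal, equity: Decimal,
                        tolerance: Decimal = Decimal("0.5")) -> str:
    """Return "ok" if assets = liabilities + equity within tolerance, else "escalate"."""
    gap = assets - (liabilities + equity)
    return "ok" if abs(gap) <= tolerance else "escalate"

print(balance_sheet_check(Decimal("1000"), Decimal("600"), Decimal("400")))  # ok
print(balance_sheet_check(Decimal("1000"), Decimal("600"), Decimal("395")))  # escalate
```

The important design choice is that a failed identity never triggers an automatic correction. The pipeline reports the break and leaves the decision to a reviewer.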

Abstain instead of guess

Low-confidence regions should not be filled with plausible text. Return an abstention with coordinates for review. Predictable abstention is easier to operationalize than unpredictable substitution.
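
In code, abstention can be represented as an explicit result rather than an empty string or a best guess. The sketch below assumes the extractor exposes a confidence score per region; the `Extraction` type, the `accept_or_abstain` function, and the 0.98 threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Extraction:
    value: Optional[str]  # None means the system abstained
    confidence: float
    page: int
    bbox: tuple[float, float, float, float]  # where a reviewer should look

def accept_or_abstain(candidate: str, confidence: float, page: int,
                      bbox: tuple[float, float, float, float],
                      threshold: float = 0.98) -> Extraction:
    """Keep the candidate only at or above the threshold; otherwise abstain with coordinates."""
    if confidence < threshold:
        return Extraction(None, confidence, page, bbox)
    return Extraction(candidate, confidence, page, bbox)

result = accept_or_abstain("Operating Income", 0.71, 14, (72.0, 310.0, 188.0, 324.0))
print(result.value)  # None: routed to review instead of emitted as a plausible label
```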

Version control with diffs and rollback

Treat extraction like software releases. Maintain immutable configurations. Run a canary corpus during upgrades. Produce side-by-side diffs for business owners to approve. Roll back if structure fidelity or numeric stability regresses.
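
A side-by-side diff over a canary corpus can be sketched in a few lines. It assumes each run is stored as a mapping from document identifier to field values; the `diff_runs` function and the identifiers are hypothetical.

```python
from typing import Optional

Run = dict[str, dict[str, str]]  # document id -> field name -> extracted value
Change = tuple[str, str, Optional[str], Optional[str]]

def diff_runs(baseline: Run, candidate: Run) -> list[Change]:
    """List every (document, field, old, new) where the two runs disagree."""
    changes = []
    for doc_id in sorted(set(baseline) | set(candidate)):
        old = baseline.get(doc_id, {})
        new = candidate.get(doc_id, {})
        for field_name in sorted(set(old) | set(new)):
            if old.get(field_name) != new.get(field_name):
                changes.append((doc_id, field_name, old.get(field_name), new.get(field_name)))
    return changes

baseline = {"doc-001": {"EBITDA": "500", "Revenue": "2100"}}
candidate = {"doc-001": {"EBITDA": "505", "Revenue": "2100"}}

changes = diff_runs(baseline, candidate)
print(changes)  # [('doc-001', 'EBITDA', '500', '505')]
```

An empty list means the upgrade is output-identical on the canary corpus. A non-empty list is the artifact business owners review before the new version is promoted or rolled back.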

The Precision Tax

We describe the Precision Tax as the hidden cost paid when extractions are neither deterministic nor citable.

- Verification overhead. Analysts re-check numbers, confirm labels, and trace values back to documents. The time saved by automation is consumed by reconciliation.

- Data drift debt. Each unannounced model change creates subtle breaks in historical series that must be corrected downstream.

- Regulatory friction. Unreproducible results are hard to defend. Teams hold capital buffers and build compensating controls to mitigate uncertainty.

Small error rates multiply across millions of pages and thousands of tables. The cost is measured not only in hours, but in distorted analytics that shape real decisions.

What engineering should evaluate

Finance holds document AI to the same bar as core accounting systems. A practical set of questions helps separate a production system from a demo.

- Are outputs reproducible across time and versions?

- Does every value include a bounding box citation and page reference?

- Is there a clear abstain strategy under low confidence?

- How are decimals, separators, units, and currencies handled deterministically?

- Are table totals, hierarchies, and cross-page continuations enforced by constraints?

- Can model or configuration changes be validated with side-by-side diffs and rolled back safely?

These questions are not about declaring winners. They are about ensuring predictability, auditability, and long-term trust, which are the properties of real financial infrastructure.

Closing

Financial document AI is not a toy problem. It is an infrastructure problem. Investment teams, risk groups, and operations rely on stable, citable data that stands up to audit years later. That standard is only met when extractions are predictable, table structures are preserved, and every number is anchored to its source with granular bounding boxes.

Precision is not a feature. Precision is the product.